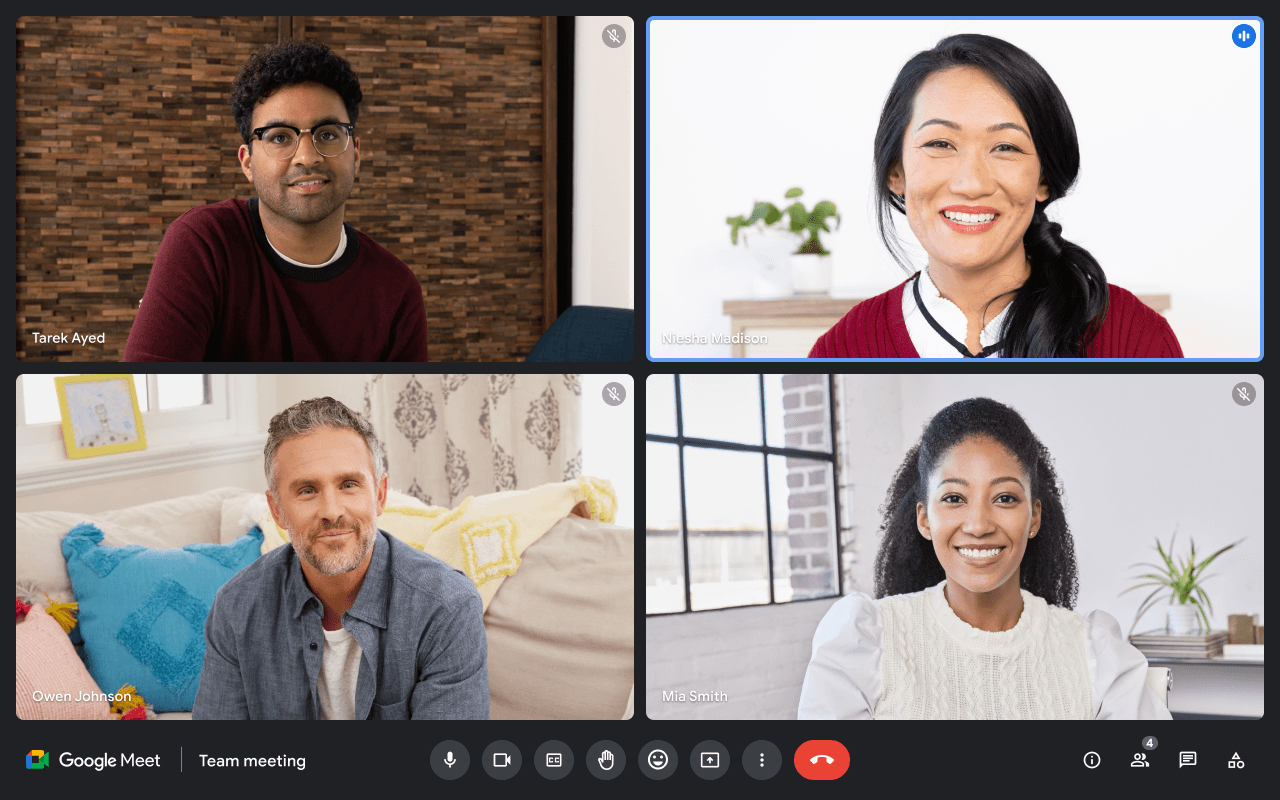

How a Deepfake Video Call Cost Arup $25 Million

A finance worker at UK engineering firm Arup was tricked into transferring $25 million after attending a video call where every participant—including the CFO—was a deepfake.

Nevrial Security Team

Threat Intelligence

January 18, 2026


The Attack That Changed Everything

In early 2024, a finance worker at Arup, the British multinational engineering firm behind iconic structures like the Sydney Opera House, received what appeared to be a routine request: an email from the company's Chief Financial Officer asking for an urgent video call to discuss a confidential transaction.

What happened next would become one of the most sophisticated corporate deepfake attacks ever documented.

A Room Full of Fakes

During the video call, the employee found themselves in a meeting with several senior colleagues, including the company's CFO. The participants discussed a confidential acquisition that required immediate fund transfers. Everything seemed legitimate—the faces matched, the voices were familiar, and the context made sense.

But every single person on that call was a deepfake.

The attackers had used AI to create video and audio deepfakes of multiple Arup executives. They had studied publicly available footage (interviews, company videos, and social media content) to train their models.

Following the instructions given on the call, the finance worker made 15 separate transfers totaling HK$200 million (approximately US$25.6 million) to five different Hong Kong bank accounts.

How the Attack Unfolded

According to Hong Kong police, the attack followed a sophisticated playbook:

- Initial Contact: The employee received a phishing email claiming to be from the UK-based CFO

- Building Trust: A video call was arranged with multiple "executives" to add legitimacy

- Creating Urgency: The transaction was framed as time-sensitive and confidential

- Executing the Fraud: Multiple smaller transfers were made to avoid triggering fraud detection

The fraud wasn't discovered until the employee later checked with the company's head office.
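
The split-transfer step in the playbook above points to a control that is easy to sketch: check the running total of transfers from one request over a time window, not just each transfer on its own. The example below is a minimal illustration, not a description of Arup's actual controls. The `Transfer` type, the `needs_escalation` function, and both limits are hypothetical names and values chosen for this sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable


@dataclass(frozen=True)
class Transfer:
    """One outgoing payment. All fields are illustrative."""
    requester: str       # who (or which request) the payment is attributed to
    amount_hkd: int      # amount in Hong Kong dollars
    timestamp: datetime  # when the payment was submitted


def needs_escalation(
    history: Iterable[Transfer],
    new: Transfer,
    per_transfer_limit_hkd: int,
    window_limit_hkd: int,
    window: timedelta = timedelta(hours=24),
) -> bool:
    """Return True if `new` should be held for independent approval.

    A transfer is held when it exceeds the per-transfer limit, or when
    it would push the requester's total inside the look-back window
    over the cumulative limit. The second check is what catches a
    large sum split into smaller payments.
    """
    if new.amount_hkd > per_transfer_limit_hkd:
        return True

    window_start = new.timestamp - window
    recent_total = sum(
        t.amount_hkd
        for t in history
        if t.requester == new.requester
        and window_start <= t.timestamp <= new.timestamp
    )
    return recent_total + new.amount_hkd > window_limit_hkd
```

With hypothetical limits of HK$20 million per transfer and HK$50 million per 24 hours, a run of HK$13 million payments passes the per-transfer check every time, but the fourth payment would bring the running total to HK$52 million and is held for review.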

Lessons from Arup's CIO

In an interview with the World Economic Forum, Arup's Chief Information Officer Rob Greig shared critical insights:

"It's freely available to someone with very little technical skill to copy a voice, image or even a video. After the incident, I was curious so I attempted to make a deepfake video of myself in real time. It took me, with some open source software, about 45 minutes."

Greig emphasized that this wasn't a traditional cyberattack—no systems were compromised, no data was stolen. Instead, it was "technology-enhanced social engineering" that exploited human trust.

Why Traditional Security Failed

The Arup attack exposed critical gaps in enterprise security:

- Email security couldn't detect the phishing attempt because the request seemed reasonable

- Video conferencing platforms have no built-in identity verification

- Financial controls were bypassed because the "CFO" was visually present and authorizing transfers

- Employee training hadn't prepared staff for real-time video deepfakes

The Growing Threat

According to Deloitte's Center for Financial Services, fraud losses enabled by generative AI, including deepfakes, could reach $40 billion in the United States by 2027.

The technology has become democratized—what once required state-level resources can now be accomplished with consumer hardware and freely available software.

How to Protect Your Organization

The Arup case demonstrates why organizations need multi-layered verification:

- Hardware-backed identity verification: Use cryptographic proofs tied to physical devices, not just visual confirmation

- Out-of-band verification: Always verify large transactions through a separate communication channel

- Verification protocols: Establish code words or security questions that only real executives would know

- Deepfake detection technology: Deploy AI systems that can identify synthetic media in real time

- Employee training: Regular training on emerging threats, including realistic simulations
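
To make the first item in the list above concrete, here is a minimal challenge-response sketch in which an approval is bound to a key held on the approver's device and to the exact transfer being approved. It rests on several assumptions: the function names are hypothetical, and an HMAC over a shared secret stands in for the asymmetric signature that a real hardware-backed authenticator would produce. It does not describe any particular product's implementation.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Generate a fresh, unpredictable 32-byte challenge for one approval."""
    return secrets.token_bytes(32)


def device_response(device_key: bytes, challenge: bytes, transfer_details: str) -> bytes:
    """Compute the approver device's proof for one specific transfer.

    Assumption: `device_key` never leaves the approver's device. In
    this sketch it is a shared secret used with HMAC-SHA256; a real
    deployment would more likely use a private key in secure hardware.
    """
    # The challenge has a fixed length, so concatenation is unambiguous.
    message = challenge + transfer_details.encode("utf-8")
    return hmac.new(device_key, message, hashlib.sha256).digest()


def verify_response(
    enrolled_key: bytes,
    challenge: bytes,
    transfer_details: str,
    response: bytes,
) -> bool:
    """Check the proof against the key enrolled for the named approver."""
    expected = device_response(enrolled_key, challenge, transfer_details)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, response)
```

Because the proof covers both a one-time challenge and the transfer details, a convincing face or voice on a call contributes nothing to approval: a proof made for one payment cannot be replayed for a different amount, account, or session.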

The Bottom Line

The Arup attack proves that seeing is no longer believing. In an era where anyone's face and voice can be convincingly replicated, organizations must move beyond visual trust to cryptographic verification.

As Greig noted: "Audio and visual cues are very important to us as humans and these technologies are playing on that. I think we really do have to start questioning what we see."

Nevrial provides real-time deepfake detection and hardware-backed identity verification for enterprise video calls. Learn how we can protect your organization.