Inside the Deepfake Attack on the World's Largest Ad Agency

Fraudsters used AI voice cloning and YouTube footage to impersonate WPP CEO Mark Read in an elaborate Teams meeting scam.

Nevrial Security Team

Threat Intelligence

January 14, 2026

When the CEO Isn't Really the CEO

In May 2024, fraudsters impersonated the head of the world's largest advertising conglomerate in an elaborate deepfake scam. WPP, with a market capitalization of approximately $11.3 billion at the time and agencies such as Ogilvy, Grey, and GroupM in its portfolio, found itself on the front lines of AI-enabled corporate fraud.

According to an exclusive report by The Guardian, CEO Mark Read detailed the attempted fraud in an email to company leadership, warning others to watch for similar attacks.

The Sophisticated Setup

The attack combined several techniques:

- Fake WhatsApp Account: Fraudsters created a WhatsApp account using a publicly available image of Mark Read

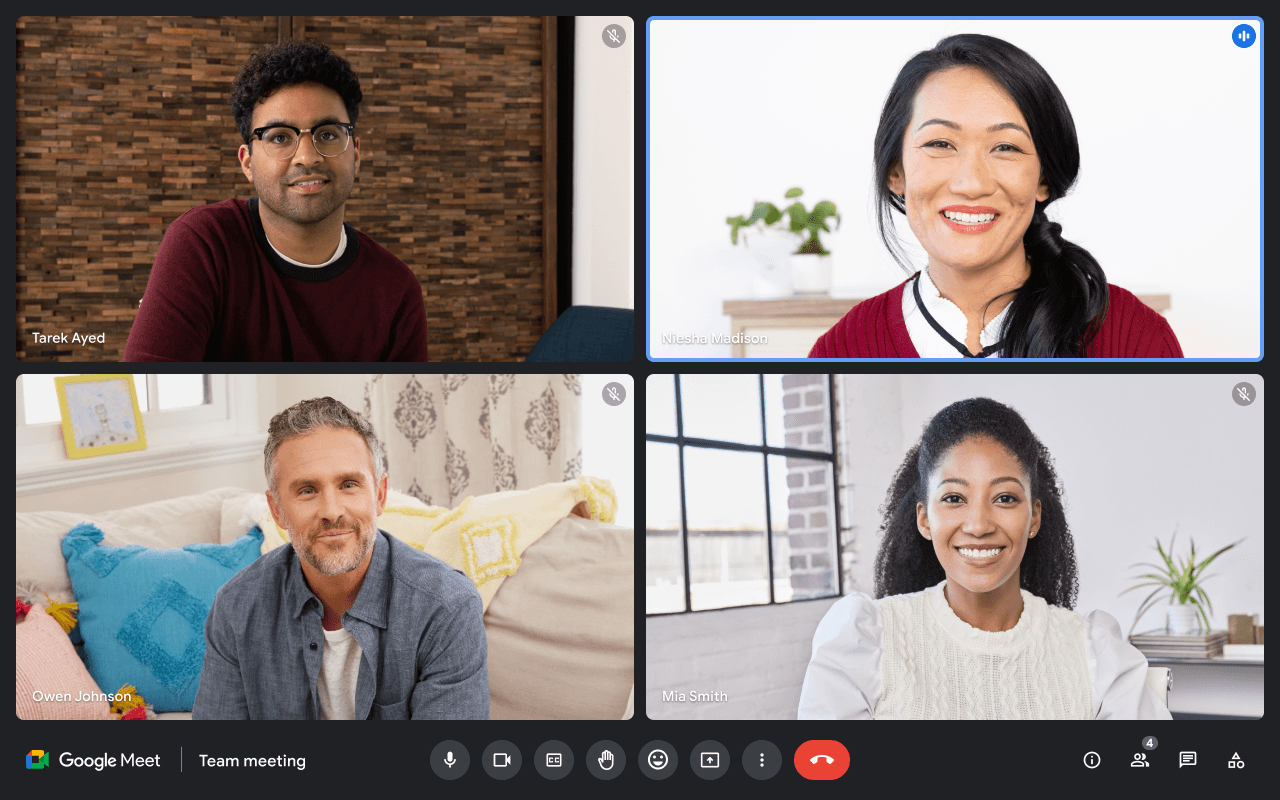

- Microsoft Teams Meeting: Using the WhatsApp account, they set up a Microsoft Teams meeting that appeared to be with Read and another senior WPP executive

- AI Voice Clone: During the meeting, attackers used an AI-generated voice clone of a senior WPP executive

- YouTube Footage: They incorporated real video footage of executives, likely sourced from public interviews and company videos

- Chat Impersonation: An impostor posed as Read himself through the Teams chat window while staying "off-camera"

The target was an "agency leader" within WPP's network of companies. The scammers attempted to convince them to set up a new business—a ruse designed to extract money and personal details.

The Warning Signs

In his email to leadership, Read identified several red flags that employees should watch for:

"Just because the account has my photo doesn't mean it's me."

He listed critical warning signs:

- Requests for passports or personal identification

- Money transfer requests

- Any mention of a "secret acquisition, transaction or payment that no one else knows about"

- Communication through unofficial channels like WhatsApp

- Unusual meeting setups where executives stay off-camera
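
Read's list translates naturally into a first-pass triage check. The Python sketch below is hypothetical and is not tooling used by WPP: the `Request` fields, the channel names in `UNOFFICIAL_CHANNELS`, and the phrases in `SECRECY_PHRASES` are all assumptions chosen for illustration. Keyword and field checks like these are easy to evade, so a match should prompt out-of-band verification, and an empty result should never be read as proof that a request is genuine.

```python
from dataclasses import dataclass

# Hypothetical policy: channels treated as "unofficial" for business requests.
UNOFFICIAL_CHANNELS = {"whatsapp", "sms", "personal_email"}

# Hypothetical phrases echoing the "secret acquisition" pattern Read warned about.
SECRECY_PHRASES = ("secret acquisition", "no one else knows", "keep this confidential")


@dataclass
class Request:
    channel: str                   # e.g. "whatsapp", "teams", "corporate_email"
    text: str                      # body of the message or chat
    asks_for_id_document: bool     # passport, national ID, etc.
    asks_for_money_transfer: bool
    executive_on_camera: bool = True


def red_flags(req: Request) -> list[str]:
    """Return the warning signs present in a request (empty list if none match)."""
    flags = []
    if req.asks_for_id_document:
        flags.append("requests identification documents")
    if req.asks_for_money_transfer:
        flags.append("requests a money transfer")
    lowered = req.text.lower()
    if any(phrase in lowered for phrase in SECRECY_PHRASES):
        flags.append("mentions a secret deal or payment")
    if req.channel.lower() in UNOFFICIAL_CHANNELS:
        flags.append("arrives via an unofficial channel")
    if not req.executive_on_camera:
        flags.append("executive stays off-camera")
    return flags


if __name__ == "__main__":
    suspicious = Request(
        channel="WhatsApp",
        text="Let's discuss a secret acquisition no one else knows about.",
        asks_for_id_document=False,
        asks_for_money_transfer=True,
        executive_on_camera=False,
    )
    print(red_flags(suspicious))
```

For a request resembling the WPP approach (WhatsApp, a money transfer, talk of a secret acquisition, an off-camera "CEO"), four of the five checks fire.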

Why Advertising Agencies Are Targets

WPP and similar holding companies present attractive targets for several reasons:

Large Financial Flows

Advertising agencies handle significant client budgets and make regular large transfers for media buys and production costs.

Distributed Structure

With hundreds of agencies across dozens of countries, WPP's decentralized structure creates opportunities for fraudsters to exploit communication gaps.

Public Executives

CEOs like Mark Read are highly visible—giving interviews, appearing at conferences, and maintaining active media presences. This provides ample training data for deepfake creation.

Creative Industry Trust

The advertising industry often operates on relationships and quick turnarounds, potentially making employees more susceptible to urgent requests.

The Irony of AI in Advertising

The attack carries a particular irony: WPP has been actively embracing generative AI. In 2023, the company announced a partnership with Nvidia to create advertisements using AI technology.

As Mark Read himself said in that announcement:

> "Generative AI is changing the world of marketing at incredible speed. This new technology will transform the way that brands create content for commercial use."

The same technology that WPP champions for creating marketing content was turned against the company in an attempted fraud.

Industry-Wide Implications

The WPP attack signals a broader threat to corporate communications:

The Trust Problem

Video calls have become ubiquitous since the pandemic, and we have learned to trust what we see on screen. Deepfakes exploit that learned trust.

The Verification Gap

Video conferencing platforms typically offer little assurance of identity beyond the displayed name, which in many setups the participant chooses. A familiar name and face on screen is not proof of who is on the call.

The Scalability Threat

As The Guardian notes, low-cost deepfake technology has become widely available. Some AI models can generate realistic voice clones from just a few minutes of audio, so attackers can impersonate many executives cheaply and repeatedly.

WPP's Response

Following the attack, WPP took several steps:

- Leadership Warning: Read's email alerted executives across the organization

- Public Disclosure: The company chose to publicize the incident, helping other organizations learn from it

- Website Warning: WPP added a notice about fraudulent use of its brand name on unofficial websites and messaging services

The company stated: "Please be aware that WPP's name and those of its agencies have been fraudulently used by third parties—often communicating via messaging services—on unofficial websites and apps."

Building Defenses

The WPP case reinforces several critical security practices:

Verify Through Official Channels

If you receive an unusual request, verify it through established company communication systems—not through the channel the request came from.
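
The essential rule is that verification contact details must come from a source the requester does not control. Here is a minimal sketch, assuming a hypothetical internal `DIRECTORY` keyed by employee (a stand-in for an HR system or identity provider) with placeholder contact details; `pick_verification_contact` and `VerificationError` are illustrative names, not part of any product.

```python
class VerificationError(Exception):
    """Raised when no independent, directory-sourced channel is available."""


# Hypothetical internal directory: the only trusted source of contact details.
# Placeholder values; in practice this would come from an HR system or identity provider.
DIRECTORY: dict[str, dict[str, str]] = {
    "ceo": {"phone": "+1-555-0100", "corporate_email": "ceo@example.com"},
}


def pick_verification_contact(claimed_sender: str, request_channel: str,
                              directory: dict[str, dict[str, str]]) -> tuple[str, str]:
    """Return a (channel, contact) pair from the directory that differs from
    the channel the request arrived on.

    Contact details supplied inside the request itself are deliberately
    ignored, because an impostor controls them.
    """
    entry = directory.get(claimed_sender)
    if entry is None:
        raise VerificationError(f"{claimed_sender!r} is not in the directory")
    for channel, contact in entry.items():
        if channel != request_channel:
            return channel, contact
    raise VerificationError("no channel independent of the request is on file")


if __name__ == "__main__":
    # A request that arrived over WhatsApp claiming to come from the CEO.
    channel, contact = pick_verification_contact("ceo", "whatsapp", DIRECTORY)
    print(f"Verify via {channel}: {contact}")
```

Any phone number, meeting link, or "new" account supplied in the suspicious message is simply never consulted.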

Question "Off-Camera" Executives

Be suspicious when senior executives stay off-camera but communicate through chat or have others speak on their behalf.

Implement Technical Solutions

Deploy deepfake detection technology that can analyze video and audio in real time for signs of synthetic generation.

Create Verification Protocols

Establish procedures for verifying high-stakes requests, including pre-arranged code words and multi-channel confirmation.
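
One way to combine both controls is to require a matching code word and confirmations on at least two channels other than the one the request arrived on. The sketch below uses hypothetical names throughout (`approve_high_stakes_request`, the channel labels, the example code word). It stores only a salted PBKDF2 hash of the code word and compares hashes in constant time with `hmac.compare_digest`. It models the decision rule only; in practice the code word would be agreed in person and checked by a human, and the multi-channel requirement matters precisely because a code word alone can be overheard.

```python
import hashlib
import hmac
import os


def hash_code_word(code_word: str, salt: bytes) -> bytes:
    """Derive a salted hash so the code word is never stored in plain text."""
    return hashlib.pbkdf2_hmac("sha256", code_word.encode("utf-8"), salt, 200_000)


def approve_high_stakes_request(request_channel: str,
                                confirmation_channels: set[str],
                                spoken_code_word: str,
                                stored_hash: bytes,
                                salt: bytes,
                                min_confirmations: int = 2) -> bool:
    """Approve only if enough independent channels confirmed AND the code word matches.

    A confirmation on the channel the request arrived on does not count,
    since whoever controls that channel could fake it.
    """
    independent = confirmation_channels - {request_channel}
    if len(independent) < min_confirmations:
        return False
    candidate = hash_code_word(spoken_code_word, salt)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, stored_hash)


if __name__ == "__main__":
    salt = os.urandom(16)
    stored = hash_code_word("blue-heron", salt)  # hypothetical pre-arranged code word
    ok = approve_high_stakes_request(
        "teams", {"teams", "phone", "corporate_email"}, "blue-heron", stored, salt
    )
    print(ok)  # True: two independent confirmations plus a matching code word
```

A confirmation echoed back inside the same Teams meeting adds nothing here; only the phone call and the corporate email count toward the threshold.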

Train Employees Regularly

Security awareness training must evolve to include deepfake threats. Employees need to understand that video calls can no longer be trusted at face value.

Nevrial's real-time deepfake detection integrates with major video conferencing platforms to verify every participant's identity. Request a demo to see how we protect enterprise communications.